The AI regression testing strategy you’ll wish you had last release

Dmitry Reznik

Chief Product Officer

Aug 22, 2025

Updated on Oct 8, 2025


You patch a failing test, and three more break. Your test coverage is wide but shallow, riddled with duplicated logic, outdated assertions, and flaky results. And every time you hit “release,” you silently hope you won’t accidentally break production.

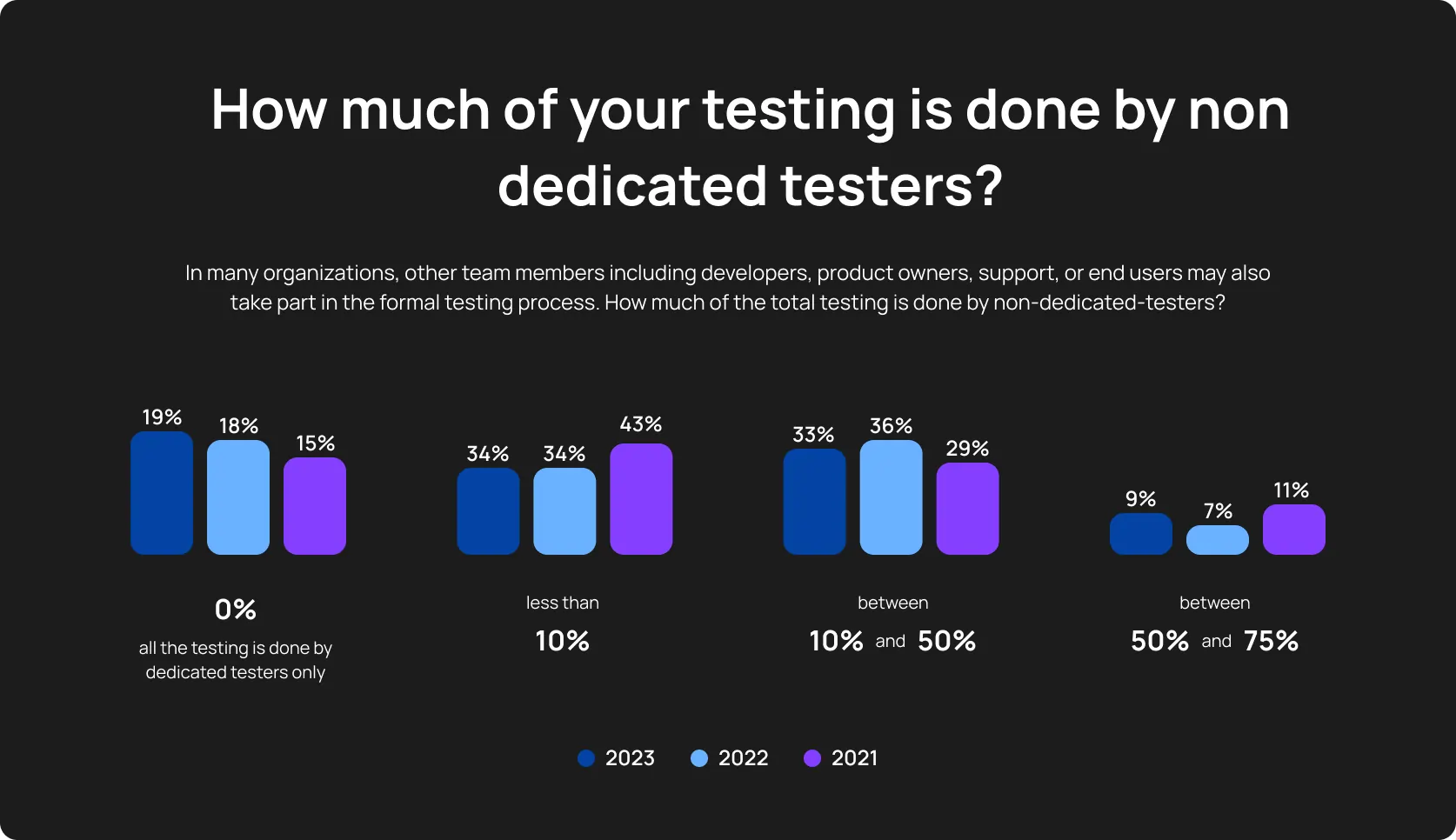

And you’re not alone: 67% of QA teams say that up to 50% of their testing relies on non-dedicated testers. That approach is inconsistent and drags out cycles, even though regression testing is supposed to protect your velocity.

Forward-thinking teams are turning to AI regression testing: a smarter, more stable, and dramatically faster way to validate code without the overhead of traditional scripting.

But don’t get us wrong from the outset: we aren’t advocating replacing humans. We’re about eliminating the wasteful layers of manual effort.

Below, we break down the regression testing strategy that modern QA teams use to move fast and test smart:

- How to spot a regression process that does more harm than good

- How exactly artificial intelligence improves coverage, resilience, and feedback speed

- The step-by-step blueprint for implementing AI-led regression testing

- And how tools like OwlityAI make this transformation feasible and effective

If you’ve ever wished your regression suite “just worked,” this is the strategy you’ll wish you’d adopted in the last release.

Why regression testing needs a reboot

Regression testing is meant to give teams confidence in every release. Instead, it has become a time sink, full of flaky scripts, false alarms, and skipped runs that pile up QA debt.

Too many flaky tests

One small UI change, and half your tests crash. Without AI support in regression testing, engineers waste hours debugging outdated locators and struggling to meet release deadlines.

Automation testers copy-paste scripts, bloating suites with redundant checks. Tests fail for no clear reason, and CTOs face the fallout: delayed features and frustrated customers. Worse, time-crunched teams skip regression runs entirely and end up shipping bugs to production.

The real cost of bad regression testing

83% of tech professionals (including QA and dev teams) report burnout. You may ask what this has to do with AI in regression testing and overall QA efficiency.

Here’s our answer: last-minute fixes, overwork, and stress all contribute to reputational damage and team fatigue. As a tech leader, you should factor this in when calculating the real cost of bad testing.

And your stakes are higher: without AI-assisted regression testing, each delayed release compounds QA debt, drives up costs, and puts market share at risk.

An older Tricentis report found that downtime caused by software failures grew by 10% in a single year, adding up to more than 200 years in total, and that software failures caused over $1 trillion in losses. It may seem like water under the bridge now that it’s 2025, but the same problems persist:

- Flaky pipelines trigger false alarms or hide real regressions

- Release delays as teams re-run tests or manually inspect failures

- Developer and QA fatigue as testing becomes a chore

And people burn out.

What AI brings to regression testing

AI in regression testing changes the most impactful aspect of your SDLC, and it isn’t the framework, the executors, or the tooling. AI changes your mindset: how, when, and why you test.

Smart prioritization

Instead of running the entire suite, AI analyzes recent code changes and flags the riskiest areas. You get targeted test runs without wasted CPU cycles. Example: after a backend refactor, only the impacted flows are re-tested first, and untouched modules can wait.
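To make change-based selection concrete, here is a minimal Python sketch, not OwlityAI’s implementation. It assumes you already have a mapping from source paths to the tests that exercise them (the `IMPACT_MAP` below is hypothetical; in practice it would come from coverage data or a tool’s dependency analysis). It shows only the selection step. Ranking the selected tests by risk is what an AI tool would add on top.

```python
from fnmatch import fnmatch

# Hypothetical mapping: source path pattern -> tests that exercise that code.
IMPACT_MAP = {
    "billing/*": {"test_checkout", "test_invoice"},
    "auth/*": {"test_login", "test_password_reset"},
    "api/orders/*": {"test_checkout", "test_order_history"},
}

def select_tests(changed_files, impact_map):
    """Return only the tests whose mapped source patterns match a changed file."""
    selected = set()
    for path in changed_files:
        for pattern, tests in impact_map.items():
            if fnmatch(path, pattern):
                selected |= tests
    return selected

if __name__ == "__main__":
    # A backend refactor that touches billing code selects only billing-related tests.
    print(sorted(select_tests(["billing/tax.py"], IMPACT_MAP)))  # ['test_checkout', 'test_invoice']
```

Files that match no pattern, such as docs, select nothing. That is how untouched modules get skipped.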

Self-healing tests

You no longer need to manually monitor every UI or API change. If a login button’s locator changes from id="submit" to class="btn-login", OwlityAI and similar tools adjust the script automatically and save the QA team hours of manual rework.
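One common way to implement self-healing is a fingerprint fallback. When a test passes, it records several traits of the target element. If the primary locator later stops matching, the test picks the element that still shares the most of those traits. The Python sketch below shows that idea under simplified assumptions: the `Element` class stands in for a DOM node, and the two-trait threshold is arbitrary. It is not how OwlityAI or any specific tool works internally.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Element:
    # Simplified, hypothetical stand-in for a DOM node (not a browser API).
    tag: str
    attrs: dict = field(default_factory=dict)
    text: str = ""

def traits(el):
    # Flatten an element into comparable traits: tag, visible text, attributes.
    return {"tag": el.tag, "text": el.text, **el.attrs}

def find_with_healing(dom, attr, value, fingerprint, min_score=2) -> Optional[Element]:
    """Try the recorded locator first. If it no longer matches, fall back to the
    element sharing the most traits with the fingerprint saved on the last pass."""
    for el in dom:
        if el.attrs.get(attr) == value:
            return el

    def score(el):
        t = traits(el)
        return sum(1 for k, v in fingerprint.items() if t.get(k) == v)

    best = max(dom, key=score, default=None)
    # Refuse to "heal" onto an element that only weakly resembles the original.
    if best is not None and score(best) >= min_score:
        return best
    return None

# The login button's id="submit" was replaced by class="btn-login".
FINGERPRINT = {"tag": "button", "text": "Log in", "id": "submit"}
DOM = [Element("input", {"name": "email"}), Element("button", {"class": "btn-login"}, "Log in")]

if __name__ == "__main__":
    print(find_with_healing(DOM, "id", "submit", FINGERPRINT).attrs)  # {'class': 'btn-login'}
```

The threshold is the key design choice. Set it too low and a test can silently "heal" onto the wrong element, which is worse than a clear failure.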

Ongoing test scenario generation

Traditionally, shipping a new feature meant planning its tests up front, by hand. Autonomous testing tools make this much easier: they continuously scan the app structure, user flows, and previous logs, and generate relevant test cases.
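Here is one simple way to turn user flows from logs into test scenarios: collapse recorded sessions into distinct paths and keep the most frequent ones. This Python sketch is a deliberately basic frequency heuristic. Autonomous tools also draw on app structure and richer models. The session data and the scenario format are hypothetical.

```python
from collections import Counter

# Hypothetical page-visit sequences taken from analytics or server logs.
SESSIONS = [
    ["home", "pricing", "signup"],
    ["home", "login", "dashboard"],
    ["home", "pricing", "signup"],
    ["home"],
    ["home", "login", "dashboard"],
    ["home", "pricing", "signup"],
]

def flows_to_scenarios(sessions, top_n=3, min_len=2):
    """Collapse sessions into distinct flows and return the most frequent ones
    as candidate regression scenarios (single-page visits are ignored)."""
    counts = Counter(tuple(s) for s in sessions if len(s) >= min_len)
    return [
        {"name": "flow_" + "_".join(flow), "steps": list(flow), "seen": n}
        for flow, n in counts.most_common(top_n)
    ]

if __name__ == "__main__":
    for scenario in flows_to_scenarios(SESSIONS, top_n=2):
        # Each scenario becomes a skeleton test: visit each step, assert it loads.
        print(scenario["name"], scenario["seen"])
```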

Failure analysis

A tool that only reports “test failed” is useless. For informed decisions, you need context: when the bug appeared, why it occurred, whether it’s a recurring error, and whether it’s a real defect at all or just a timeout. AI helps by classifying failures as flaky or legitimate.
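The kind of triage described above can be approximated with rules. An AI tool learns these signals from history rather than hard-coding them. In this Python sketch, the error markers, the 20% threshold, and the label names are all assumptions for illustration.

```python
from dataclasses import dataclass

# Assumed error signatures that usually indicate infrastructure noise.
TRANSIENT_MARKERS = ("timeout", "connection reset", "503", "stale element")

@dataclass
class Failure:
    test: str
    message: str
    passed_on_retry: bool    # did an immediate rerun on the same commit pass?
    recent_fail_rate: float  # share of the last N runs that failed (0.0-1.0)

def classify(f: Failure) -> str:
    """Label a failure as 'flaky', 'needs-triage', 'regression', or 'recurrent'."""
    if f.passed_on_retry:
        # Same code, different outcome: the test is nondeterministic.
        return "flaky"
    if any(m in f.message.lower() for m in TRANSIENT_MARKERS):
        # Failed twice, but with a noise-like error: a human should look.
        return "needs-triage"
    if f.recent_fail_rate < 0.2:
        # A previously stable test now fails consistently: likely a real defect.
        return "regression"
    # The test has been failing often already: a known, recurring problem.
    return "recurrent"
```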

Maintenance cut by design

AI handles script updates, test syncing, and flaky reruns in the background. Instead of tying up the entire QA team, you need a single person to oversee the tool’s performance and adjust your testing strategy.

5 steps to an AI regression testing strategy

A smart regression testing strategy is about testing the right things, in the right way, at the right time. QA engineers, DevOps architects, and CTOs, this is your step-by-step guide on how to implement modern and effective AI regression testing.

Step 1: Prioritize the regression scope

It may sound heretical, but don’t run the entire test suite every time. It’s unnecessary. Instead, focus on:

- High-traffic user flows

- Recent commit zones (the modules you’ve changed in the last 3-5 merges)

- Areas where many defects occurred

Key point: The 2024 State of DevOps Report found that about 20% of an app’s codebase causes the lion’s share of production bugs.

Example for SaaS: what is the critical path in a typical SaaS product? The subscription funnel is the obvious answer. By implementing AI regression testing that covers this path, SaaS teams can cut regression scope by one-third while still catching ~95% of all defects.

So don’t re-test static or low-impact modules; prioritize smartly.
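The three prioritization criteria in Step 1 (traffic, recent changes, defect history) can be combined into a simple risk score. This Python sketch uses fixed, illustrative weights and a threshold. An AI tool would learn these from your history. The module data is hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Module:
    name: str
    daily_users: int              # traffic through flows that touch this module
    changed_in_last_merges: bool  # touched in the last 3-5 merges
    defects_last_quarter: int

def risk_score(m, max_users, max_defects):
    # Normalize each signal to 0-1; the 0.4/0.3/0.3 weights are assumptions.
    traffic = m.daily_users / max_users if max_users else 0.0
    defects = m.defects_last_quarter / max_defects if max_defects else 0.0
    recency = 1.0 if m.changed_in_last_merges else 0.0
    return 0.4 * traffic + 0.3 * recency + 0.3 * defects

def regression_scope(modules, threshold=0.3):
    """Return module names at or above the risk threshold, riskiest first."""
    max_users = max((m.daily_users for m in modules), default=0)
    max_defects = max((m.defects_last_quarter for m in modules), default=0)
    scored = [(risk_score(m, max_users, max_defects), m.name) for m in modules]
    return [name for score, name in sorted(scored, reverse=True) if score >= threshold]

SAMPLE_MODULES = [
    Module("checkout", 5000, True, 8),
    Module("settings", 300, False, 0),
    Module("onboarding", 2000, False, 4),
    Module("legal_pages", 100, False, 0),
]

if __name__ == "__main__":
    print(regression_scope(SAMPLE_MODULES))  # ['checkout', 'onboarding']
```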

Step 2: Collect previous data and train AI

Teach your AI testing tool which changes matter: feed it past test runs, CI logs, failures, and code diffs. The type and quality of this data directly determine:

- How precisely the tool will prioritize what to test and when

- Which tests the tool will recognize as broken

- Whether the tool’s forecasting will work or not

Pro tip: Ensure your previous test logs, apart from pass/fail rates, include execution times and defect details. This makes training more accurate and effective.
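Following the pro tip, here is a minimal Python sketch of a run record that keeps duration and defect links alongside pass/fail, then aggregates the records into per-test features a prioritization model could use. The field names and features are assumptions, not any tool’s required schema.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean
from typing import Optional

@dataclass
class RunRecord:
    test: str
    commit: str
    passed: bool
    duration_s: float
    defect_id: Optional[str] = None  # linked bug ticket, if the failure was real

def features(runs):
    """Aggregate raw CI history into per-test features."""
    by_test = defaultdict(list)
    for r in runs:
        by_test[r.test].append(r)
    return {
        test: {
            "runs": len(rs),
            "fail_rate": sum(not r.passed for r in rs) / len(rs),
            "mean_duration_s": mean(r.duration_s for r in rs),
            "real_defects": len({r.defect_id for r in rs if r.defect_id}),
        }
        for test, rs in by_test.items()
    }

HISTORY = [
    RunRecord("test_checkout", "a1", True, 12.0),
    RunRecord("test_checkout", "a2", False, 14.0, "BUG-101"),
    RunRecord("test_login", "a2", True, 3.0),
]
```

Without `duration_s` and `defect_id`, a model can’t separate slow-but-stable tests from fast-but-flaky ones, or real failures from noise.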

Step 3: Hand over test generation and maintenance to the AI tool

Instead of writing tests from scratch, follow these three steps:

- Point the tool at your latest build or URL

- Let it scan the app and map (“tie”) UI objects to their locations

- The AI tool will generate test cases based on your data and expected behavior patterns

Context is what turns this kind of optimization into real AI test automation.
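The mechanical part of Step 3 is turning a scanned inventory of UI objects into test cases. This Python sketch shows that part. The scan format, sample data, and step vocabulary are hypothetical. What a real AI tool contributes is the context this sketch fakes with placeholders: expected behavior, realistic data, and business rules.

```python
# Hypothetical output of a UI scan: each page with its forms and links.
SCAN = {
    "/login": {"forms": [{"id": "login-form", "required": ["email", "password"]}],
               "links": ["/signup"]},
    "/signup": {"forms": [{"id": "signup-form", "required": ["email"]}],
                "links": ["/login"]},
}

# Placeholder values per field name; a real tool would infer realistic data.
SAMPLE_DATA = {"email": "qa@example.com", "password": "correct-horse"}

def generate_cases(scan):
    """Turn a scanned page inventory into simple, declarative test cases."""
    cases = []
    for page, info in scan.items():
        for form in info["forms"]:
            fills = [("fill", name, SAMPLE_DATA.get(name, "test")) for name in form["required"]]
            cases.append({
                "name": f"submit {form['id']} on {page}",
                "steps": [("open", page)] + fills + [("submit", form["id"])],
                "expect": "no validation errors",
            })
        for link in info["links"]:
            cases.append({
                "name": f"navigate {page} -> {link}",
                "steps": [("open", page), ("click_link", link)],
                "expect": f"url is {link}",
            })
    return cases
```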

Step 4: Integrate it into CI/CD

Connect the regression test suite to your pipeline, but don’t run the entire suite on every commit. Split it this way:

- Every commit: run only the high-priority tests

- Daily or pre-release: run the full suite on a schedule that suits you

- Outcome: regressions in high-risk modules surface quickly

OwlityAI integrates with GitHub Actions, GitLab, and Jenkins via API and runs tests in parallel.

Step 5: Monitor trends

QA engineers often focus on individual failures, treating them as the most important part of the job. But trends matter just as much, and that’s what dashboards are for. They give you a clear view of:

- Which tests consistently fail

- Which areas produce clustered failures

- Where and why the coverage is declining

Even with AI in regression testing, creativity and context understanding remain irreplaceable parts of a QA job.
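The three dashboard signals listed above can be approximated from raw run history. In this minimal Python sketch, the tuple format, the 80% "consistently fails" threshold, and the "two or more failures" clustering rule are assumptions. The sketch shows where problems are. Explaining why still takes human judgment.

```python
from collections import Counter

def trends(history, coverage_by_area, fail_threshold=0.8, cluster_min=2):
    """history: (test, area, passed) tuples from recent runs.
    coverage_by_area: area -> coverage percentages, oldest first."""
    runs, fails, area_fails = Counter(), Counter(), Counter()
    for test, area, passed in history:
        runs[test] += 1
        if not passed:
            fails[test] += 1
            area_fails[area] += 1
    return {
        "consistently_failing": sorted(t for t in runs if fails[t] / runs[t] >= fail_threshold),
        "clustered_areas": [a for a, n in area_fails.most_common() if n >= cluster_min],
        "coverage_declining": sorted(
            a for a, series in coverage_by_area.items()
            if len(series) >= 2 and series[-1] < series[0]
        ),
    }

HISTORY = [
    ("test_checkout", "payments", False),
    ("test_checkout", "payments", False),
    ("test_refund", "payments", False),
    ("test_refund", "payments", True),
    ("test_login", "auth", True),
    ("test_login", "auth", True),
]
COVERAGE = {"payments": [82.0, 79.5, 77.0], "auth": [70.0, 71.0, 72.5]}
```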

What metrics to consider important and monitor constantly

Now it’s time to check whether you’re getting the most out of AI for QA and to size up options for improvement. Here are the five metrics worth tracking:

1. Time saved per regression cycle

Measure how long a full-suite run took before. Then measure an AI-prioritized run. Finally, compare the two.

Pro tip: Use CI/CD timestamps to calculate mean runtime savings. Target 40-70% reductions; it’s a common benchmark.
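The pro tip above comes down to simple arithmetic on CI timestamps. This Python sketch assumes you can export each run’s start and finish times as ISO-8601 strings. The sample runs are made up for illustration.

```python
from datetime import datetime
from statistics import mean

def runtime_minutes(runs):
    """runs: (started_at, finished_at) ISO-8601 strings exported from CI."""
    return [
        (datetime.fromisoformat(end) - datetime.fromisoformat(start)).total_seconds() / 60
        for start, end in runs
    ]

def mean_savings_pct(full_runs, prioritized_runs):
    """Percentage reduction in mean runtime: full suite vs. AI-prioritized runs."""
    before = mean(runtime_minutes(full_runs))
    after = mean(runtime_minutes(prioritized_runs))
    return (before - after) * 100 / before

FULL = [("2025-08-01T02:00:00", "2025-08-01T03:30:00"),
        ("2025-08-02T02:00:00", "2025-08-02T03:10:00")]
PRIORITIZED = [("2025-08-05T10:00:00", "2025-08-05T10:30:00"),
               ("2025-08-06T10:00:00", "2025-08-06T10:34:00")]

if __name__ == "__main__":
    print(f"{mean_savings_pct(FULL, PRIORITIZED):.1f}% saved")  # 60.0% saved
```

Here the mean drops from 80 to 32 minutes, a 60% reduction, which falls inside the 40-70% range mentioned above.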

2. Test coverage vs. risk-weighted priority

Coverage on its own is a spherical cow in a vacuum: it means little without context about what it covers and how effectively. Track coverage of critical paths and recently changed components.

Check whether your tests actually exercise the code changed in recent commits to business-critical flows (payment, onboarding, etc.).
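One way to weight coverage by risk is to give each component a business weight, count recently changed components double, and report which changed critical components have no test hits. This Python sketch does that. The weights and the doubling rule are illustrative assumptions, not a standard formula.

```python
def risk_weighted_coverage(components):
    """components: name -> (risk_weight, covered_by_tests, changed_recently).
    Recently changed components count double, since regressions cluster there."""
    total = covered = 0.0
    for weight, is_covered, changed in components.values():
        w = weight * (2 if changed else 1)
        total += w
        if is_covered:
            covered += w
    return covered / total * 100 if total else 0.0

def untested_changes(components):
    """Names of recently changed components that no test exercises."""
    return sorted(
        name for name, (_, is_covered, changed) in components.items()
        if changed and not is_covered
    )

COMPONENTS = {
    "payment": (5, True, True),
    "onboarding": (3, False, True),
    "settings": (1, True, False),
    "footer": (0.5, True, False),
}
```

Plain component coverage here is 3 of 4 (75%), but the risk-weighted figure is about 66%, because the untested onboarding flow was just changed.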

3. Percentage of flaky tests

When UI changes break workflows, you won’t move fast (or earn users’ trust). To avoid this:

- Monitor how often you re-run tests

- Check the number of tests marked as unstable

- Understand root causes: which factors cause the most problems (locators, network lag, race conditions, and so on)
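The three checks above can be computed from raw results. In this Python sketch, a test counts as flaky if it both passed and failed on the same commit. Every extra attempt on a commit counts as a rerun. Failure causes are tallied for flaky tests only. The tuple format and cause tags are assumptions about how your results are exported.

```python
from collections import Counter, defaultdict

def flaky_report(results):
    """results: (test, commit, passed, cause) tuples; cause is a short root-cause
    tag for failures (e.g. 'locator', 'network', 'race') or None."""
    outcomes = defaultdict(set)
    attempts = Counter()
    for test, commit, passed, _ in results:
        outcomes[(test, commit)].add(passed)
        attempts[(test, commit)] += 1
    tests = {t for t, _ in outcomes}
    # Flaky: both a pass and a fail were observed for the same test on the same commit.
    flaky = {t for (t, _), seen in outcomes.items() if seen == {True, False}}
    reruns = sum(n - 1 for n in attempts.values())
    causes = Counter(c for t, _, passed, c in results if not passed and c and t in flaky)
    return {
        "flaky_pct": len(flaky) / len(tests) * 100 if tests else 0.0,
        "reruns": reruns,
        "top_causes": causes.most_common(),
    }

RESULTS = [
    ("test_login", "c1", False, "locator"), ("test_login", "c1", True, None),
    ("test_search", "c1", False, "network"), ("test_search", "c1", True, None),
    ("test_cart", "c1", True, None), ("test_profile", "c1", True, None),
    ("test_search", "c2", False, "network"), ("test_search", "c2", True, None),
]
```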

4. QA effort per release

To avoid team burnout, notice which tasks take up the most time and effort (and often have little real impact). Start with these areas:

- Test maintenance

- Manual reruns

- Failure triage

Target a ~45% decrease in QA cycle time. Reallocate the freed-up time to self-development (or at least to exploratory or performance testing).
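To check progress against that target, log QA hours per activity for each release, then compare totals before and after adoption. This Python sketch also reports which activity still consumes the most time. The categories match the list above, and the hour figures are purely illustrative.

```python
def effort_change(before_hours, after_hours):
    """Hours per activity for one release, before and after adopting AI tooling.
    Returns the total % decrease and the activity that still costs the most."""
    before, after = sum(before_hours.values()), sum(after_hours.values())
    decrease_pct = (before - after) * 100 / before if before else 0.0
    biggest_remaining = max(after_hours, key=after_hours.get) if after_hours else None
    return decrease_pct, biggest_remaining

BEFORE = {"maintenance": 40, "manual_reruns": 25, "triage": 35}
AFTER = {"maintenance": 18, "manual_reruns": 8, "triage": 30}

if __name__ == "__main__":
    print(effort_change(BEFORE, AFTER))  # (44.0, 'triage')
```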

5. Bugs in production

This is the ultimate regression metric. Compare bug counts from production monitoring (Sentry, Datadog) with the pre-release issues your tests caught.

Target at least a 30% reduction after implementing AI-driven regression testing.
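Raw bug counts can mislead when release volume changes, so it helps to track the defect escape rate alongside the reduction: the share of all defects that reached production. This Python sketch assumes you compare two periods of similar length and release cadence. The counts are illustrative.

```python
def escape_rate(prod_bugs: int, caught_pre_release: int) -> float:
    """Share of all defects that escaped to production (lower is better)."""
    total = prod_bugs + caught_pre_release
    return prod_bugs / total if total else 0.0

def prod_bug_reduction_pct(before: int, after: int) -> float:
    """Percentage drop in production bugs between two comparable periods."""
    return (before - after) * 100 / before if before else 0.0

if __name__ == "__main__":
    print(escape_rate(20, 80), escape_rate(12, 88))  # 0.2 0.12
    print(prod_bug_reduction_pct(20, 12))            # 40.0
```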

How OwlityAI supports smarter regression testing

OwlityAI speeds up what slows regression down and automates the parts that cause the most delays and problems. Here are some of its features:

- Autonomous scanning of UIs to detect changes and generate updated test scripts

- Self-healing for selectors when the DOM or API changes

- Test prioritization via real change data to rank critical test paths

- Simultaneous cloud execution with thread doubling (up to 5x speed)

- Flaky test detection and resolution built-in

- CI/CD pipeline integration via API

- Real-time bug reporting exported directly to your PM tools

Check our calculator to see how much time and money OwlityAI can save your QA team on regression testing.

Bottom line

What distinguishes AI-driven regression testing strategies from outdated manual approaches? Not AI, really. Not even the speed of the cycle.

It’s the number of bugs that reach production, and the number of team members taking PTO after every release because of burnout.

That’s why you should upgrade to AI regression testing, not because of the hype.

Instant CI/CD integration, faster detection of broken tests, only relevant test suites, insightful reports… If you’re ready to add your own solved problems and wins to this list, drop us a line to book a demo.

FAQ

1. What problems does AI solve in regression testing that traditional automation can’t?

AI detects hidden dependencies between code changes and test failures, automatically prioritizing tests that matter most. Unlike rule-based scripts, it can identify risky areas, self-heal broken locators, and adapt to evolving UIs without manual updates.

2. How accurate is AI in predicting regression risks?

Modern AI-driven testing tools can reach over 85–90% accuracy in identifying regression-prone areas when trained on historical test data, logs, and code diffs. The precision improves with every test cycle as the model learns from real project patterns.

3. Is AI regression testing suitable for small QA teams?

Yes. AI in regression testing for QA teams with limited manpower helps automate repetitive validation, cut maintenance time, and focus efforts on exploratory or performance testing instead of manual regression runs.

4. How much setup time does AI regression testing require?

Initial setup typically takes a few hours to a couple of days, depending on your CI/CD maturity and data volume. Once integrated, test generation, prioritization, and healing become continuous and autonomous.

5. Can AI detect flaky tests automatically?

Yes. AI models analyze failure patterns, log history, and environment data to distinguish flaky tests from real bugs, reducing false alarms and wasted debugging time.

6. How does AI regression testing impact release frequency?

Teams using AI regression testing tools often report 30–70% faster regression cycles. This directly supports more frequent, stable releases without increasing QA workload.

7. What are the data requirements for training an AI regression model?

You’ll need historical CI logs, test results, code commits, and defect reports. The richer and cleaner the dataset, the more accurately AI can forecast potential regressions and prioritize test coverage.

8. Does AI regression testing replace QA engineers?

No. AI eliminates repetitive work, not human judgment. QA engineers remain essential for defining scenarios, validating complex logic, and interpreting business impact — areas AI still can’t replicate.

9. Which metrics show that AI regression testing is working?

Key indicators include reduced test maintenance time, fewer flaky failures, faster regression cycles, higher coverage of critical paths, and a measurable drop in production bugs after releases.

10. What’s the best AI regression testing tool for CI/CD pipelines?

Top platforms include OwlityAI, Functionize, Testim, and Mabl. The best choice depends on your stack, integration needs, and whether you prioritize self-healing, test generation, or analytics.
