What you need to change in your team before AI testing can work

Dmitry Reznik

Chief Product Officer


A recent study by a joint UK-US research group found that developers in a Java-based continuous integration team spend around 1.28% of their monthly hours fixing flaky tests. In monetary terms, that’s USD 2,250 per month per project.

Let’s lock that in — money drained.

Moving on. The 2023 Future of Quality Assurance study states that 77.7% of testers use AI tools, yet 73.8% still run full automated suites without risk-based prioritization. Diagnostics happen in outdated loops. Without measurement that reflects adaptive behaviour, the investment in AI testing tools goes down the drain.

Let’s put that down, too.

Genuine QA transformation requires a shift in both mindset and day-to-day practices. Without sprint-level planning, you end up with brittle automation and keep hand-scripting pipelines. A road to nowhere, indeed.

This article gives you a simple, actionable checklist to assess your AI testing readiness. It covers:

- The reason why “next-gen-super-alpha” testing fails in unprepared teams

- What exactly you need to change to make AI deliver

- The checklist itself, for a quick, high-level assessment

Intelligent automation in QA is not as far off as you think. It begins with the next section.

Why AI testing fails in unprepared teams

Buddhist wisdom says: don’t build expectations, and you won’t be disappointed. This applies to software testing, too. You should outline what you want (and expect), but don’t set unrealistic goals.

According to GitLab’s 2024 Global DevSecOps Report, 78% of teams had adopted AI tools, but 41% and 46%, respectively, still plan to improve delivery efficiency and optimize DevOps processes.

Misaligned expectations

One idea runs through our entire blog: at the moment, you can’t achieve full automation without fine‑tuning or context.

For example, if a team expects AI to instantly handle complex UI tests but hasn’t defined clear objectives or refined its test data, it will drown in irrelevant results.

It is your task, as a tech leader, to realign expectations and position AI as an amplifier of human expertise. Otherwise, AI becomes a bulk processing engine rather than a strategic partner.

Old QA habits

We are living in an unprecedented time. AI agents search the web to book a bungalow for your family trip, while home robots clean the house and your fridge orders the groceries.

Paradoxically, many teams still follow waterfall QA models or insist on scripted test updates before code changes. We can, to some extent, understand and accept the inertia of large corporations. But US SMBs, and small businesses even more so…

How can we prepare for AI in QA if 26% of teams don’t know how to measure their automation effort and don’t even plan for what software testing should look like in just 1-5 years?

Skill gaps

The State of Software Quality Report 2025 revealed that 41% of management participants reported a lack of testing staff with AI confidence or data literacy.

Many companies use AI tools regularly and even see benefits from them, yet their teams can’t feed in historical logs, configure test heuristics, or interpret AI-generated suggestions. More surprisingly, 56% still can’t keep up with demand.

No shared ownership

You might think that when everyone is responsible for everything, no one is responsible for anything. But QA transformation is a slightly different case.

Create pipelines where QA, development, and operations collaborate and share responsibility for the end product: here, a well-tested, well-functioning app.

Why this is important: AI tooling creates a single space for technical staff of all types (devs, testers, and others). Dashboards, continuous integration, and other features enable faster collaboration, but if your team is used to siloed work, the system just won’t run.

What your team needs to change before AI testing can work

Obviously, the first thing is to assess your AI testing readiness. Below are the key steps you should complete after that.

Shift from task‑based QA to risk‑insight QA

We’ve already agreed that “tests executed” is not the best way to gauge the effectiveness of software testing. High-risk flow validation, by contrast, lets QA move from running the same old regressions to focusing on user-impact zones.

A QA manager can use AI to check whether the code contains especially high-risk areas. This is prevention rather than execution in the classic sense. For it to work, though, CTOs must empower QA to own the quality strategy.
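To make the idea of risk-based prioritization concrete, here is a minimal sketch in Python. It is not how any particular AI tool scores risk: the `CandidateTest` fields, the `risk_score` formula, and the sample data are all assumptions chosen for illustration. The point is only that tests touching changed code in high-impact flows run first.

```python
from dataclasses import dataclass


@dataclass
class CandidateTest:
    """Hypothetical record describing one automated test."""
    name: str
    covered_files: set[str]      # files the test exercises (assumed to be known)
    recent_failure_rate: float   # share of failed runs in recent history, 0..1
    user_impact: int             # 1 (cosmetic) .. 5 (login, checkout, payments)


def risk_score(test: CandidateTest, changed_files: set[str]) -> float:
    """Illustrative score: change overlap x user impact, boosted by failure history."""
    if not test.covered_files:
        return 0.0
    overlap = len(test.covered_files & changed_files) / len(test.covered_files)
    return overlap * test.user_impact * (1.0 + test.recent_failure_rate)


def prioritize(tests: list[CandidateTest], changed_files: set[str], top_n: int) -> list[str]:
    """Return the names of the top_n riskiest tests for this change set."""
    ranked = sorted(tests, key=lambda t: risk_score(t, changed_files), reverse=True)
    return [t.name for t in ranked[:top_n]]


# Made-up sample data for a single commit.
tests = [
    CandidateTest("checkout_flow", {"cart.py", "payment.py"}, 0.2, 5),
    CandidateTest("profile_avatar", {"profile.py"}, 0.0, 1),
    CandidateTest("login_flow", {"auth.py", "session.py"}, 0.1, 5),
]
changed = {"payment.py", "auth.py", "session.py"}

print(prioritize(tests, changed, top_n=2))
```

With this sample data, `login_flow` ranks first because both of its files changed, `checkout_flow` comes second, and `profile_avatar` is skipped because nothing it covers was touched. A real setup would replace the hand-written weights with signals learned from run history.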

Build cross‑functional ownership

From now on, the Head of QA is not the only one responsible for the product’s quality. Developers, Ops, and everyone else involved with your product are, too.

DevOps needs to monitor auto‑healing test failures, QA needs to review release‑critical flows flagged by AI, and product teams should update acceptance criteria when a flow changes.

Set up a cross-team war room where QA feeds AI insights directly into sprint planning with Dev and deployment tweaks with Ops.

Accept that AI is also learning

Many teams give up on AI testing tools because they don’t see impressive results in the first sprint or release. As a result, they never see them at all, because they didn’t give the AI a chance to learn.

Artificial intelligence is a learning system. Give it clean, curated data, set the flow, tune some settings or features during the pilot, and watch the magic happen.

Eventually, the initially “weak” or “raw” tool generates tests, self-heals them, and reprioritizes them based on real runtime data. Treat it as a junior tester who suggests tests and cases and learns from developers’ feedback. Give it some time, and you’ll see improvement.

Train for AI‑augmented testing

QA engineers should develop skills in data analysis, log interpretation, and feedback loop calibration. Sam Altman, the OpenAI CEO, has said that he doesn’t consider coding as important a skill as continuous learning and adaptability.

Right now, the key skill is AI fluency. What will it be in 5-10 years? Nobody knows, but we should be prepared.

Rethink success metrics

Many QA metrics are now obsolete. Track these instead: self‑healing rate, AI-generated coverage against flows, false-positive ratio, time-to-triage, and bug-detection velocity. These metrics show what you actually want to know: whether the AI tool delivers or not.
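As a rough illustration, three of these metrics can be computed from a simple log of test failures. The sketch below is one possible set of definitions, not an industry standard: the `FailureEvent` fields and the exact formulas are assumptions, and the sample events are invented.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class FailureEvent:
    """Hypothetical record of one failed test run."""
    detected_at: datetime
    triaged_at: Optional[datetime]  # None if no human had to triage it
    self_healed: bool               # the tool repaired the test on its own
    real_bug: bool                  # triage confirmed a product defect


def self_healing_rate(events: list[FailureEvent]) -> float:
    """Share of failures the tool repaired without human help."""
    return sum(e.self_healed for e in events) / len(events) if events else 0.0


def false_positive_ratio(events: list[FailureEvent]) -> float:
    """Share of human-triaged failures that turned out not to be real bugs."""
    triaged = [e for e in events if e.triaged_at is not None]
    if not triaged:
        return 0.0
    return sum(not e.real_bug for e in triaged) / len(triaged)


def median_minutes_to_triage(events: list[FailureEvent]) -> Optional[float]:
    """Median time from detection to triage, in minutes."""
    minutes = [
        (e.triaged_at - e.detected_at).total_seconds() / 60
        for e in events
        if e.triaged_at is not None
    ]
    return median(minutes) if minutes else None


# Invented sample: four failures detected at the same moment in one sprint.
t0 = datetime(2025, 1, 6, 9, 0)
events = [
    FailureEvent(t0, None, self_healed=True, real_bug=False),
    FailureEvent(t0, t0 + timedelta(minutes=30), self_healed=False, real_bug=True),
    FailureEvent(t0, t0 + timedelta(minutes=90), self_healed=False, real_bug=False),
    FailureEvent(t0, t0 + timedelta(minutes=60), self_healed=False, real_bug=True),
]

print(self_healing_rate(events))
print(false_positive_ratio(events))
print(median_minutes_to_triage(events))
```

Whatever definitions you settle on, keep them fixed across sprints so that the trend, rather than any single number, tells you whether the tool is improving.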

A practical AI testing readiness checklist

Don’t reinvent the wheel…because modern AI test automation strategy is not about driving but more about flying. Weird metaphor, but still. Run through the checklist below to assess your AI testing readiness in all dimensions: mindset, ownership, integration, strategy, and adaptability.

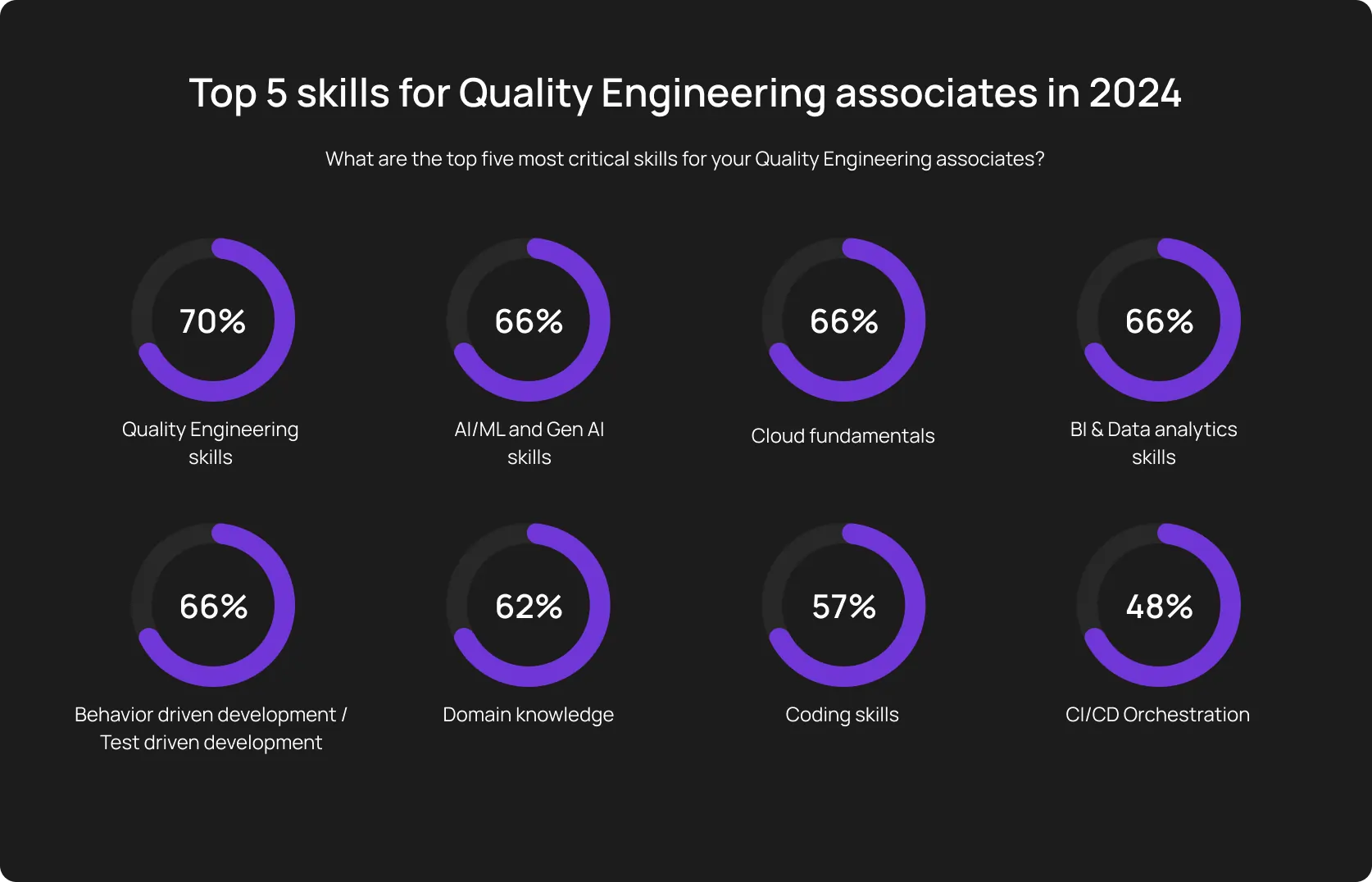

Why this matters: 66% of those surveyed by Capgemini cite AI/ML and GenAI skills among the top 5 critical skills in their work. At the same time, 53% of QA engineers still lack the required skillset.

AI testing tools aren’t a silver bullet by default. Successful rollout depends on alignment across roles, pipelines, strategy, and mindset.

How OwlityAI supports teams transitioning to AI testing

At the very least, our tool makes sure your team doesn’t get a shock. That is the casual take. Digging deeper, it brings QA managers, DevOps teams, and CTOs into modern testing with minimal friction and maximum impact. They can roll out the tool’s capabilities gradually and measurably without breaking their usual workflows.

Autonomous work with human-in-the-loop validation

A typical OwlityAI flow: constant crawling via computer vision → auto‑generating test cases → handing them to QA for approval.

This so-called “test‑buddy approach” lets you run pilots before enabling full automation. Once you are confident enough, you add more features: an autonomous check after each code change → recreating brittle tests and removing irrelevant ones → running the new suite → double-checking the results → notifying human operators of the status with an actionable list.

Minimal disruption with no‑code onboarding

A recent Capgemini report found that 0% of respondents lack a dedicated QA team on their project. But let’s face it: plenty of teams do, they just don’t take part in such surveys.

Too small to have a dedicated QA engineer? No problem: with OwlityAI, you don’t need to know the UI code or have any QA experience. Paste your app’s URL, and OwlityAI begins discovering flows and generating tests. It’s that simple.

Adoption across test layers

Enable modules one by one:

- Run a functional scan

- Approve auto-test creation

- Enable auto-healing maintenance

- Launch CI/CD integration

- Turn on reporting dashboards

Every feature roll-out is optional and reversible. Get first wins, then scale up.

Built-in KPI tracking and reporting

OwlityAI allows tracking:

- Self‑healing rate per sprint

- AI-generated coverage mapped to business flows

- QA time saved vs baseline
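For readers who want to sanity-check such numbers by hand, the last two KPIs reduce to simple ratios. The sketch below is an assumption about how they could be defined, not OwlityAI’s internal formulas or API. The function names, flow names, and hours are hypothetical.

```python
def flow_coverage(business_flows: set[str], flows_with_tests: set[str]) -> float:
    """Share of business flows that have at least one generated test."""
    if not business_flows:
        return 0.0
    return len(business_flows & flows_with_tests) / len(business_flows)


def time_saved_pct(baseline_hours: float, current_hours: float) -> float:
    """QA hours saved relative to a pre-rollout baseline, as a percentage."""
    if baseline_hours <= 0:
        raise ValueError("baseline_hours must be positive")
    return 100.0 * (baseline_hours - current_hours) / baseline_hours


# Hypothetical sprint: four business flows, two of them covered by tests.
business_flows = {"signup", "login", "checkout", "refund"}
flows_with_tests = {"login", "checkout", "search"}

print(flow_coverage(business_flows, flows_with_tests))
print(time_saved_pct(baseline_hours=40, current_hours=28))
```

Note that `search` has tests but is not a listed business flow, so it does not count toward coverage. Measure the baseline before the rollout, otherwise there is nothing to compare against.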

You’ll like the dashboards with role-based views (engineer vs exec) and visualized impact without.

Bottom line

We are living in a time when even small businesses think through an AI test automation strategy. But when we talk about enterprises, we mean extensive QA teams, a lot of data (for AI model training), and impressive budgets.

This is why many SMBs and small companies think they are too small a fish in this pond for QA transformation.

OwlityAI turns this thought upside down with a plain, user-friendly interface, at least 10 helpful features for SMBs, and extremely flexible plans.

To check your AI testing readiness, book a free 30-min call with our team.
