How to budget for AI test automation in your next release cycle

Dmitry Reznik

Chief Product Officer

“AI is transforming test automation”, “AI speeds up software development”, “AI this, AI that”... But why does no one talk about AI testing ROI?

And when someone does, why do critical benefits, features, and deliverables get left out of the calculation?

TechRadar recently reported that, as organizations rush to deploy AI-driven development workflows, nearly half report annual losses exceeding USD 1 million due to poor software quality and automation inefficiencies. Two-thirds expect a high risk of outages as speed overtakes oversight.

You might now think, “Well, this is the real cost of AI QA.” And that suspicion is partly justified: teams either underestimate the overhead of adopting AI testing or overestimate the savings. As a result, they overrun the budget, delay releases, and miss their ROI targets.

We believe that modern SMBs can avoid such failures and achieve far better results with proper preparation.

Below is a practical framework for calculating an AI test automation budget: how to model true cost drivers, define phased investment, anticipate infrastructure and training needs, and calculate ROI. Let’s make your next release well-executed and measurable.

The cost of testing today and why it’s rising

Nothing groundbreaking here: software is becoming more complex and sophisticated. A common rule of thumb is to keep roughly a 50-50 balance between development and testing effort.

At the same time, manual QA demands human capital while the labor market faces a significant talent shortage, and legacy automation clogs the delivery pipeline with maintenance work.

That’s why, without a fresh approach, QA is doomed to become a financial and operational bottleneck.

Manual QA is resource-heavy

Let’s break down what you sign up for with manual testing. It requires hours of exploratory checks, regression walkthroughs, and repeated validation. You also need at least one seasoned professional for supervision (ideally a couple at different levels, or support from your tech team).

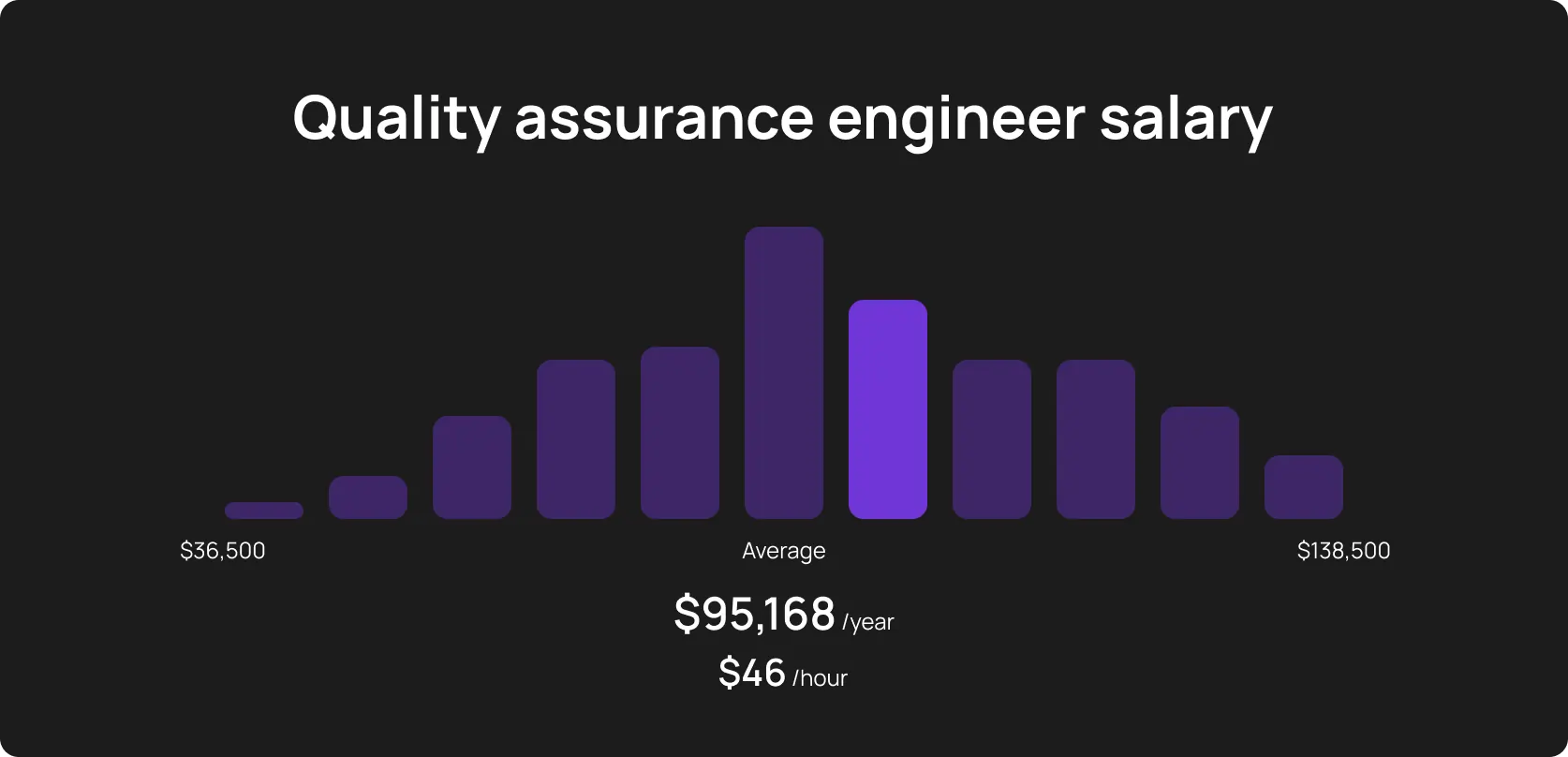

Rest assured, they are expensive. In the US, manual QA engineers earn an average of USD 95,846 per year, with senior roles often reaching USD 118,000 or more.

The U.S. Bureau of Labor Statistics puts the median annual salary even higher, at USD 131,450.

Western European roles range from EUR 60,000 to EUR 70,000 annually (we took Germany as a benchmark). In Poland, QA engineers earn between USD 3,000 and USD 4,500 per month.

Add up these numbers and project how much you will spend on inefficiency if you hold back from leveling up your testing.

Legacy automation requires constant maintenance

This part stems from the previous one: traditional, affordable test automation (that is, scripted automation) delivers only if it is maintained.

But just imagine your QA team members’ faces when they have to rework tests after every UI tweak, DOM restructure, or minor change. These manual updates usually consume ~30% of the team’s bandwidth. Eventually, this drains the budget, weakens trust in automation, and makes burnout across your team pretty likely.

Growing app complexity = growing QA costs

Modern software means microservices, multi-channel UIs, and international compliance, all of which widen the testing scope. This forces you, as a tech or management leader, to keep your strategy flexible.

Each new integration or market expansion significantly increases the workload. QA teams end up reacting rather than testing proactively, and that reactive model doesn’t scale, either financially or operationally.

What goes into the budget for AI test automation

The best way to approach an AI test automation budget is to think of it as a multi-component mosaic. When you have a 10,000-piece puzzle, chances are you’ll assemble it in stages, not in one night-long sprint. The same goes for budgeting for QA automation. Below is a breakdown of the line items and less-obvious variables that often trip up CFOs and QA leads alike.

AI platform/tooling costs

This one is obvious. Tools may charge per seat, per test, or by consumption (such as credits or hours used). Pricing models are still evolving: vendors like Microsoft started with per-user charges (around USD 30 per month for Copilot) but are shifting to consumption-based pricing as compute costs come into focus. OwlityAI’s credit-based model aligns spend with usage, which helps businesses of different sizes keep future costs in check.

Setup and onboarding

There’s also the effort of bringing automation online: scanning your UI components, configuring test scope, and integrating with existing frameworks. Like other modern autonomous platforms, OwlityAI minimizes this friction with autonomous scanning and computer-vision-driven test generation, reducing ramp-up from weeks to days.

Training and leveling up

New tools require new skills. It’s already hard to imagine a capable tech team without skills in prompt tuning, test review, and oversight of adaptive logic.

A common pitfall: many leaders leave the time for upskilling QA engineers (and the tech team as a whole) out of the training cost and the AI test automation budget. A similar oversight is neglecting product stakeholders.

They should also understand AI’s outputs, or at least their impact. Otherwise, AI-generated coverage will drift away from product priorities.

Ongoing usage and scaling

Quite logically, test executions become more frequent as releases do, and cloud usage and credit consumption grow with them. The budget must reflect this ramp-up over time and account for added integrations (new services, environments, etc.) that increase AI pipeline requirements.
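To see how this ramp-up shapes the budget, here is a minimal sketch of a usage projection. Every number in it (release cadence, runs per release, credits per run, price per credit) is a hypothetical placeholder, not OwlityAI’s or any other vendor’s actual pricing, so swap in your own figures.

```python
# Hypothetical inputs: replace with figures from your own pipeline and vendor quote.
RUNS_PER_RELEASE = 50        # test-suite executions per release (assumption)
CREDITS_PER_RUN = 3          # credits one execution consumes (assumption)
PRICE_PER_CREDIT_CENTS = 10  # price of one credit, in cents (assumption)


def monthly_usage_cost(releases_per_month: int) -> float:
    """Return the projected monthly usage cost in USD for a release cadence."""
    credits = releases_per_month * RUNS_PER_RELEASE * CREDITS_PER_RUN
    return credits * PRICE_PER_CREDIT_CENTS / 100


# Assumed ramp-up: releases per month double each quarter.
ramp_up = {"Q1": 2, "Q2": 4, "Q3": 8}
projection = {quarter: monthly_usage_cost(n) for quarter, n in ramp_up.items()}

for quarter, cost in projection.items():
    print(f"{quarter}: USD {cost:,.2f} per month")
```

Even with a flat price per credit, the bill follows the release cadence, which is why a single first-quarter estimate tends to understate the annual spend.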

Monitoring and optimization

Don’t rush, but don’t put off measuring for too long either. Once AI is live, ROI starts to show, and teams must evaluate reports, triage flaky outcomes, and adjust prioritization logic.

This oversight consumes QA and DevOps cycles. Top-tier AI tools offer actionable dashboards with a clear high-level view, which reduces that load and the amount of manual analysis.

How to build a budget based on your QA maturity

Plenty of proven frameworks exist for calculating a testing budget. But experienced leaders know that budgeting for QA automation means matching investments to your QA maturity level.

Not only are many companies unable to dispassionately assess their level of QA maturity, but they also forget to budget for the time spent defining acceptable failure thresholds, annotating AI-generated test cases, or training stakeholders to interpret AI insights.

These invisible but essential elements define what a high-ROI implementation actually is.

For manual-first teams

Your focus should be on making your quick wins visible, so we’d budget for AI-generated smoke tests or auto-generated regression flows.

What to include:

- Plugin licenses

- Setup time for scanning your UIs

- A small hackathon sprint (1 to 2 weeks) for validation

This helps prove value before scaling.

In practice: Set AI-aligned KPIs that show the reduction in manual test hours, and allocate 10-15% of your QA sprint capacity to the AI pilot.
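As a quick illustration of what that 10-15% allocation means in hours, here is a small sketch. The team size and hours per person are made-up example values, so use your own sprint numbers.

```python
def pilot_hours(team_size: int, hours_per_person: int,
                low_pct: int = 10, high_pct: int = 15) -> tuple[float, float]:
    """Return the (low, high) range of sprint hours to reserve for an AI pilot."""
    capacity = team_size * hours_per_person
    return capacity * low_pct / 100, capacity * high_pct / 100


# Hypothetical team: 4 QA engineers with 60 focused hours each per sprint.
low, high = pilot_hours(team_size=4, hours_per_person=60)
print(f"Reserve {low:.0f} to {high:.0f} hours per sprint for the pilot")
```

For this example team, the pilot takes 24 to 36 hours per sprint, which is small enough to run alongside regular work.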

For teams with partial automation

Replace brittle, manual scripts with self-healing, AI-generated flows.

How to: Use autonomous testing tools to migrate your existing stable scripts, allow AI to detect locators dynamically, and maintain script clarity.

Budget for a 2-3 sprint migration cadence; include test validation and occasional overrides.

For mature teams

We assume you have already had success in quality assurance, maybe even with artificial intelligence. So you can scale with AI testing ROI in mind.

Integrate AI into CI/CD with risk-based test prioritization and restructure team roles: you need QA strategists now, not classic testers.

Budget focus: Cloud credits for parallel execution, dashboards for KPI tracking, and possibly headcount reduction or reallocation rather than expansion.

Measuring ROI from AI test automation

As a company, you invest money and human and technical resources. As a leader, you invest attention, time, and effort. That’s why SMBs sometimes give up on novel initiatives: they just don’t see acceptable returns.

Often, that’s because they only take into account tangible things like money and changes in team size. Savvy leaders measure ROI in terms of release confidence, time, team morale, and input-output balance, not just money.

Time saved per regression cycle

Still, there’s no denying that time matters. Track actual reductions in regression runtime and compare the pre-AI and post-AI stages.

Reducing regression from 40 hours to 8 hours saves 32 hours per cycle, and that value multiplies as the release cadence increases. Also, monitor “time-to-feedback” for critical flows.
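Using the 40-hour and 8-hour figures from the example above, a short sketch shows how cadence multiplies the saving. The two cadences (monthly and biweekly releases) are our assumptions for illustration.

```python
def annual_hours_saved(hours_before: float, hours_after: float,
                       cycles_per_year: int) -> float:
    """Return regression hours saved per year at a given release cadence."""
    return (hours_before - hours_after) * cycles_per_year


# 40 -> 8 hours per cycle, as in the example above; cadences are assumptions.
monthly = annual_hours_saved(40, 8, cycles_per_year=12)
biweekly = annual_hours_saved(40, 8, cycles_per_year=26)

print(f"Monthly releases:  {monthly} hours saved per year")
print(f"Biweekly releases: {biweekly} hours saved per year")
```

The per-cycle saving stays at 32 hours, but moving from monthly to biweekly releases lifts the yearly total from 384 to 832 hours.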

Hours of test maintenance eliminated

You have probably guessed this: automated frameworks also have a maintenance cost. To get the whole picture, measure how much time your team spends fixing tests per week, and compare the results pre-AI versus post-AI. Across the entire testing process, teams can achieve up to a 90% maintenance reduction with AI tools.

QA cost per release

Now it’s time to calculate broader, business-level metrics. Roll up labor, compute, license, and operational costs, and you’ll have everything that went into the release. This lets you compare ROI across manual testing, traditional automation, and AI QA per feature release.
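The roll-up can be sketched in a few lines. The `ReleaseCost` class is a name we made up for this example, and every figure in the three scenarios is a placeholder rather than a benchmark, so the comparison only becomes meaningful once you plug in your own data.

```python
from dataclasses import dataclass


@dataclass
class ReleaseCost:
    """Cost inputs for one release; all amounts in USD."""
    labor_hours: float
    hourly_rate: float
    compute: float
    licenses: float
    operations: float

    def total(self) -> float:
        """Roll up labor, compute, license, and operational cost."""
        labor = self.labor_hours * self.hourly_rate
        return labor + self.compute + self.licenses + self.operations


# Hypothetical placeholder figures for one feature release.
scenarios = {
    "manual": ReleaseCost(labor_hours=160, hourly_rate=50,
                          compute=0, licenses=0, operations=500),
    "scripted": ReleaseCost(labor_hours=80, hourly_rate=50,
                            compute=300, licenses=400, operations=500),
    "ai": ReleaseCost(labor_hours=30, hourly_rate=50,
                      compute=600, licenses=900, operations=500),
}

totals = {name: cost.total() for name, cost in scenarios.items()}
for name, total in totals.items():
    print(f"{name}: USD {total:,.0f} per release")
```

Keeping the four cost components separate makes it easy to see where a cheaper approach actually saves money: here the AI scenario pays more for compute and licenses but far less for labor.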

Coverage and bug detection improvements

Quality improvement is another end goal of AI testing. Ideally, you measured quality metrics (related to bug discovery) before starting the AI implementation.

Now it’s time to check the percentage of critical flows covered with stable and meaningful tests. Precision- and recall-like metrics will show defect capture, for example, the ratio of defects detected before production to total defects. A 50-80% defect reduction is a strong win to bring to management meetings when advocating for autonomous testing.
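Both metrics reduce to simple ratios. The sketch below uses function names and sample counts that we invented for illustration. Treat the detection percentage as a recall-like measure: of all known defects, how many were caught before production.

```python
def detection_percentage(found_pre_production: int, escaped: int) -> float:
    """Share of all known defects that were caught before production."""
    total = found_pre_production + escaped
    if total == 0:
        raise ValueError("no defects recorded for this period")
    return 100 * found_pre_production / total


def critical_flow_coverage(covered_flows: int, total_flows: int) -> float:
    """Share of critical flows that have stable, meaningful tests."""
    if total_flows == 0:
        raise ValueError("no critical flows defined")
    return 100 * covered_flows / total_flows


# Hypothetical release: 45 defects caught in testing, 5 found in production,
# and 18 of 24 critical flows covered.
detection = detection_percentage(found_pre_production=45, escaped=5)
coverage = critical_flow_coverage(covered_flows=18, total_flows=24)

print(f"Pre-production detection: {detection:.1f}%")
print(f"Critical flow coverage:   {coverage:.1f}%")
```

Tracking both numbers per release, before and after the AI rollout, gives you the trend line that management will ask for.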

Budgeting mistakes to avoid

Why do you think we, as a tech industry, still don’t have a perfectly effective and efficient tool for software testing? Google, Meta, Adobe: even tech giants run into budgeting pitfalls, especially in underexplored areas.

But we still recommend test automation cost planning, because it at least brings transparency about true cost drivers and maturity readiness. Here are the mistakes we see most often.

Sticking to legacy tools

AI-driven, modern tools are often incompatible with legacy test management systems. Even if they are compatible, the integration will consume more effort than planned.

Apart from unclear migration paths, there may also be incomplete APIs and inconsistent data models. It’s a good idea to include at least a 15-20% buffer in your AI test automation budget for compatibility and data harmonization.
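Applied to a concrete number, the buffer looks like this. The base budget of USD 50,000 is a hypothetical figure chosen only to make the arithmetic easy to follow.

```python
def budget_with_buffer(base_budget: int, buffer_pct: int) -> float:
    """Return the base budget plus a compatibility buffer, in USD."""
    return base_budget * (100 + buffer_pct) / 100


base = 50_000  # hypothetical base budget in USD
low = budget_with_buffer(base, 15)
high = budget_with_buffer(base, 20)

print(f"Plan for USD {low:,.0f} to USD {high:,.0f}")
```

For this example, the 15-20% buffer adds USD 7,500 to USD 10,000 on top of the base budget.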

Overinvesting in full-suite automation from day one

This is the flip side of any novel endeavor: overreach. Many companies want to ride the wave and call themselves AI-first.

But the truth is that AI-driven end-to-end suites may backfire unless tuned properly. If giant corporations, with all their resources and talent, implement artificial intelligence gradually, what can SMBs expect?

Only a burned budget, because unproven pipelines are very difficult to maintain without incremental validation. Start with regression and smoke-test automation, then layer on advanced AI flows once you get tangible and acceptable results.

Ignoring the cost of not automating

In chasing affordable test automation, SMBs often forget to include the cost of not automating in their calculations. Every hotfix, manual rework, and patch release is a cost that stays invisible… until the entire product breaks.

Teams often omit the “opportunity cost” of slower release cycles. A mature AI test strategy considers both the savings and the revenue protected from late or buggy releases.

Tools with opaque pricing or hidden costs

There’s no denying that, amid all the AI hype, many vendors play on our emotions, promising affordability and “unique” features while hiding the real costs in per-seat licensing, proprietary infrastructure, and metered API calls. An efficient and affordable test automation strategy can’t rely on opaque pricing.

Bottom line

It may sound odd, but the golden rule of fitness applies here: to start benefiting from AI software testing, you need discipline, in this case financial discipline.

Think globally, act locally: adopt artificial intelligence step by step, but with long-term scalability in mind. This especially applies to budgeting. A properly built AI test automation budget accelerates delivery and keeps costs predictable.

One day, we will all see fully autonomous testing agents embedded directly into CI/CD pipelines, dynamically generating and executing test suites. Of course, this won’t happen overnight, so you have some time. But teams that start now will capitalize first.

If you are on the fence about implementing autonomous testing, whether because of doubts about AI testing ROI or for any other reason, book a demo with OwlityAI’s team.

Let’s find a way to make your testing effective and affordable.
