Autonomous software testing is creating a lot of buzz, and for good reason. That reason isn’t potential alone but a response to real-world failures. Let’s consider two different examples with the same outcome.

1/ Boeing 737 Max crashes: Software glitches contributed to two tragic accidents, which killed 346 people. The fallout cost Boeing at least USD 200 M at first and shook public trust in aviation safety.

2/ Uber self-driving car crash in Arizona: The crash resulted in a pedestrian fatality. The National Transportation Safety Board's investigation revealed that the autonomous system failed to identify the pedestrian and didn't initiate emergency braking.

After failures like these, who can say that autonomous software testing is unimportant?

As more companies explore AI and machine learning in testing, myths and misconceptions abound. Some claim these tools will replace human testers entirely, while others dismiss them as glorified automation scripts. The truth? It is probably somewhere in between.

Without further ado, let’s move on to the current state of autonomous testing: what it can do, what it can't, and how it fits into modern QA strategies.

What is autonomous software testing?

In traditional software testing, your development team finishes a coding iteration and then assigns a ticket for testing. The key point is that testing happens after coding. Autonomous software testing runs automatically and is akin to having a tireless, hyper-intelligent QA team that works 24/7 without coffee breaks.

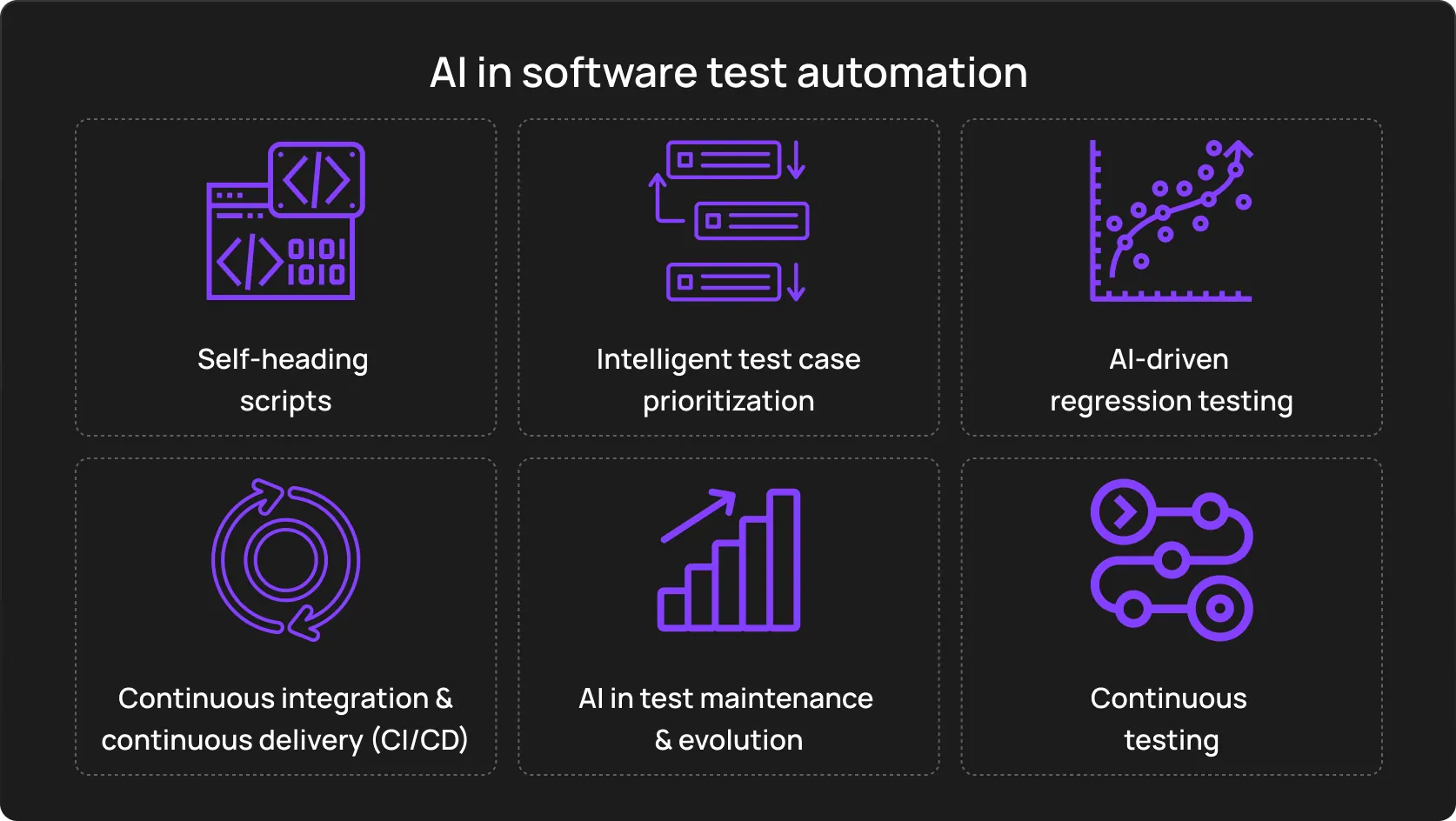

An AI-powered testing system designs, executes, and analyzes tests without human intervention. It's like upgrading from a manual assembly line to a fully automated smart factory.

The technology under the hood is complex:

- Machine Learning algorithms: They learn from past data, user behavior, and system logs to predict potential failure points and generate test cases.
- Natural Language Processing (NLP): This enables the system to understand applications and interact with them in a way that imitates human behavior.
- Computer Vision: This technology allows the testing system to identify, interpret, and interact with graphical user interfaces and predict potential UI/UX issues.
- Reinforcement Learning: The system continuously improves its testing strategies based on outcomes. The perk is that it becomes more intelligent over time on its own.
- Bayesian Networks: Probabilistic models that help the system reason about uncertainties and make decisions in complex, dynamic environments.
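To make the machine learning item a bit more concrete, here is a minimal sketch of how past results could drive test prioritization. It is not how any particular tool works: the `history` data, the test names, and the `failure_score` and `prioritize` helpers are all hypothetical, and a smoothed failure rate stands in for a trained model.

```python
from collections import Counter

# Hypothetical history: (test_name, passed) pairs from earlier runs.
history = [
    ("checkout", False), ("checkout", True), ("checkout", False),
    ("login", True), ("login", True), ("login", True),
    ("search", True), ("search", False),
]

def failure_score(history, prior_failures=1, prior_runs=2):
    """Return a smoothed failure rate per test.

    The priors keep tests with very few runs from getting extreme scores.
    """
    runs = Counter(name for name, _ in history)
    failures = Counter(name for name, passed in history if not passed)
    return {
        name: (failures[name] + prior_failures) / (runs[name] + prior_runs)
        for name in runs
    }

def prioritize(history):
    """Order tests so the historically riskiest ones run first."""
    scores = failure_score(history)
    return sorted(scores, key=lambda name: scores[name], reverse=True)

print(prioritize(history))  # ['checkout', 'search', 'login']
```

A real system would likely use richer signals (code changes, usage logs), but the idea of ranking by predicted risk is the same.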

Key difference

Traditional testing: Requires manual script writing and maintenance. Every time your software changes, these scripts need updating, which is a slow and error-prone process.

Autonomous testing: No need to update tests, which means fewer errors. The AI adapts automatically to changes in the software, keeping tests accurate and up-to-date without human input.
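One way to picture "adapts automatically" is a locator that survives a renamed element. The sketch below is a toy illustration under our own assumptions, not the mechanism of OwlityAI or any other tool: the page is modeled as a list of dictionaries, and `find_element`, `similarity`, and the 0.6 threshold are hypothetical.

```python
from difflib import SequenceMatcher

def similarity(a, b):
    """Text similarity between 0.0 and 1.0."""
    return SequenceMatcher(None, a, b).ratio()

def find_element(elements, recorded, threshold=0.6):
    """Find the element matching a recorded fingerprint, tolerating changes."""
    # 1. Exact id match: what a hand-written script typically relies on.
    for el in elements:
        if el["id"] == recorded["id"]:
            return el
    # 2. Fallback: the most similar element with the same tag, by visible text.
    candidates = [el for el in elements if el["tag"] == recorded["tag"]]
    best = max(
        candidates,
        key=lambda el: similarity(el["text"], recorded["text"]),
        default=None,
    )
    if best is not None and similarity(best["text"], recorded["text"]) >= threshold:
        return best
    return None  # Nothing close enough: flag for human review.

# The button was recorded with one id, then renamed in a later release.
recorded = {"id": "btn-submit", "tag": "button", "text": "Submit order"}
new_page = [
    {"id": "btn-cancel", "tag": "button", "text": "Cancel"},
    {"id": "btn-place-order", "tag": "button", "text": "Submit your order"},
]

print(find_element(new_page, recorded)["id"])  # btn-place-order
```

A script that only knows `btn-submit` would break here, while the fallback still finds the right button and returns `None` when nothing is similar enough.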

Common myths about autonomous testing

Myth 1: Total replacement for QA teams

Have you heard those complaints about global AI enslavement? Namely, that Artificial Intelligence will replace millions of specialists across all fields. The chain of thought usually looks like “If AI can handle testing, there’s no longer a need for human involvement.”

Reality: Autonomous testing tools don’t replace QA teams; they empower them. Instead of burning out on repetitive and time-consuming tasks, testers get time to focus on strategic and potentially more impactful work. For example:

- Heuristic analysis: QA experts apply cognitive heuristics to identify edge cases that AI might miss.
- Ontological test design: Teams create comprehensive test taxonomies, mapping the entire domain of possible system behaviors.
- Stochastic process modeling: QA pros model system behavior as stochastic processes to predict rare but critical failures.
- Metamorphic testing: Testers check relationships that must hold between the outputs of related inputs, even when the exact expected output is unknown.
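Metamorphic testing is the easiest of these to show in code. In the sketch below, `search` is a hypothetical stand-in for the system under test, and the catalog is made up. Neither check needs to know the correct result for a query, only how two related queries should compare.

```python
import random

def search(products, max_price):
    """System under test (hypothetical): products at or below max_price."""
    return [p for p in products if p["price"] <= max_price]

def check_subset_relation(products, low, high):
    """Metamorphic relation: a tighter price cap can only return a subset."""
    assert low <= high
    tight = {p["name"] for p in search(products, low)}
    loose = {p["name"] for p in search(products, high)}
    return tight <= loose

def check_order_relation(products, max_price, seed=0):
    """Metamorphic relation: shuffling the catalog must not change the result set."""
    shuffled = products[:]
    random.Random(seed).shuffle(shuffled)
    original = {p["name"] for p in search(products, max_price)}
    permuted = {p["name"] for p in search(shuffled, max_price)}
    return original == permuted

catalog = [
    {"name": "mouse", "price": 20},
    {"name": "keyboard", "price": 45},
    {"name": "monitor", "price": 180},
]

print(check_subset_relation(catalog, low=30, high=100))  # True
print(check_order_relation(catalog, max_price=50))       # True
```

If either check returned `False`, the search would have a bug, even though no one wrote down the expected output for any single query.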

AI in testing integrates with the entire cycle, but it’s the human insight that guides these systems, fine-tunes the algorithms, and interprets the results. So rather than eliminating QA roles, autonomous testing shifts their focus, making these roles even more crucial.

Myth 2: It’s just hype

Another software testing myth is that autonomous testing is just a passing trend with no real staying power, a buzz that doesn't deserve long-term attention.

Reality: Autonomous testing is a far cry from hype; it’s a significant advancement in the field. As James Whittaker, a former Engineering Director at Google, once said, “Automation is the future, and those who don’t embrace it will be left behind.”

From finance to healthcare, we see many cases where teams have reduced testing times, improved accuracy, and achieved more efficient resource use by automating their testing efforts, which shows that this software testing trend is here to stay.

Myth 3: Autonomous testing can test everything

Handling everything is a challenging task, not only for humans but for software as well. The “jack of all trades” belief suggests that once autonomous testing is in place, there’s no need for manual oversight.

Reality: While autonomous testing is incredibly powerful, it’s not a one-size-fits-all solution. There are still scenarios where human oversight is vital:

- User experience testing: Understanding the subtleties of user interactions often requires human intuition and empathy, something AI currently lacks.
- Complex business logic: Certain edge cases or complex workflows might not be fully understood by AI, necessitating human intervention to ensure all scenarios are covered.

Remember the two fatal examples from the beginning of the article? They are further proof that human oversight must stay in place.

And two more bite-sized myths.

Myth 4: Autonomous testing is 100% accurate

- Like any tool, autonomous testing isn't perfect. It has flaws, such as false positives or missed issues.
- Teams should validate test results and continuously refine AI models to maintain accuracy.
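Validating results can start with something as simple as comparing what the tool flagged against what a human confirmed. The sketch below assumes you keep such a triage log. The `triage_metrics` helper and the sample numbers are hypothetical and only illustrate the arithmetic.

```python
def triage_metrics(findings):
    """Summarize tool accuracy from (flagged_by_tool, confirmed_by_human) pairs."""
    tp = sum(1 for flagged, real in findings if flagged and real)
    fp = sum(1 for flagged, real in findings if flagged and not real)
    fn = sum(1 for flagged, real in findings if not flagged and real)
    # Precision: share of flagged issues that were real bugs.
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: share of real bugs that the tool actually flagged.
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {
        "false_positives": fp,
        "missed": fn,
        "precision": precision,
        "recall": recall,
    }

# Made-up triage log: 6 real bugs caught, 2 false alarms, 2 bugs missed, 10 clean checks.
findings = (
    [(True, True)] * 6
    + [(True, False)] * 2
    + [(False, True)] * 2
    + [(False, False)] * 10
)

print(triage_metrics(findings))
# {'false_positives': 2, 'missed': 2, 'precision': 0.75, 'recall': 0.75}
```

Tracking these two numbers over time shows whether refining the AI models is actually paying off.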

Myth 5: It's too complex and costly for small teams

Autonomous testing is not a tech giant's prerogative. Modern tools like OwlityAI make it accessible to teams of all sizes. Small teams can leverage this technology to punch above their weight. Despite all the crises, now is the time when small startups really can smash giants with state-of-the-art solutions (and a bit of courage).

You may also believe that autonomous testing is too costly to justify the investment. However, the long-run benefits of autonomous testing can far outweigh the initial costs.

Automating repetitive tasks and reducing the need for manual testing saves time and money on labor costs and infrastructure. Calculate the average rate of your current and potential staff, and you’ll see that you looked for copper and found gold.
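Here is a back-of-the-envelope version of that calculation. Every number below is a placeholder to swap for your own figures, not real pricing or a benchmark, and the `monthly_savings` and `payback_months` helpers are ours, purely for illustration.

```python
import math

def monthly_savings(hours_saved_per_month, hourly_rate, tool_cost_per_month):
    """Net monthly saving: labor cost avoided minus what the tool costs."""
    return hours_saved_per_month * hourly_rate - tool_cost_per_month

def payback_months(setup_cost, hours_saved_per_month, hourly_rate, tool_cost_per_month):
    """Whole months until the one-time setup cost is recovered, or None if never."""
    net = monthly_savings(hours_saved_per_month, hourly_rate, tool_cost_per_month)
    if net <= 0:
        return None
    return math.ceil(setup_cost / net)

# Placeholder inputs: 80 manual hours saved per month at 40 per hour,
# a tool costing 1000 per month, and 6000 of one-time setup effort.
print(monthly_savings(80, 40, 1000))       # 2200
print(payback_months(6000, 80, 40, 1000))  # 3
```

If the result is `None`, the tool costs more than the labor it saves at your current scale, which is worth knowing before you commit.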

The real benefits of autonomous testing

Speed: Traditional testing methods take days or weeks to complete, depending on the project’s size. OwlityAI’s base function automates everything from test creation to execution. With a shorter development cycle, you can release even 100 times a day if you want to. Being able to test continuously without manual bottlenecks gives a significant competitive edge.

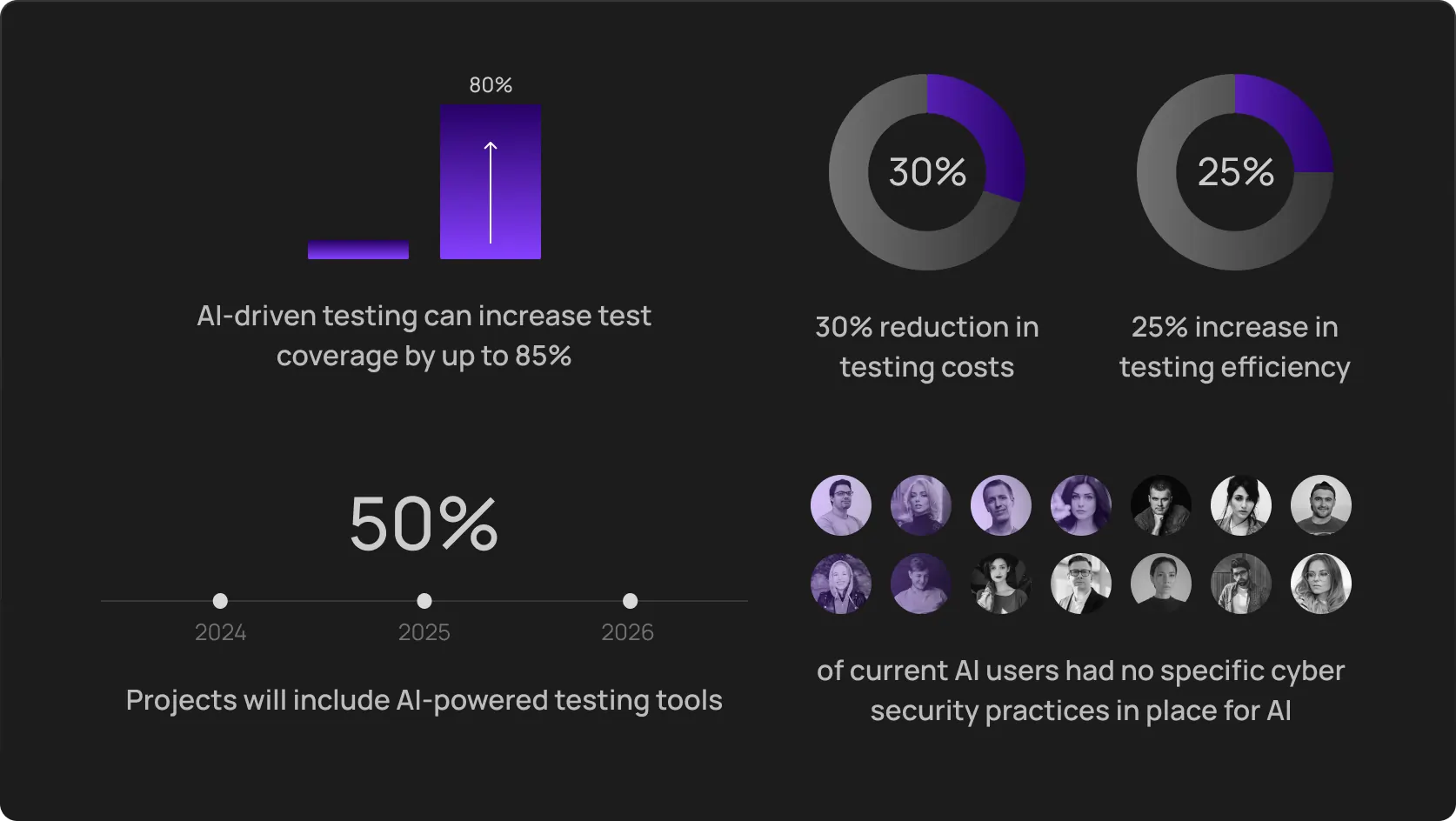

Accuracy: Even though we said above that autonomous testing isn’t 100% accurate, it’s drastically more accurate than manual testing, where human errors such as misinterpreted test results or skipped test cases are almost inevitable. An autonomous test platform also increases test coverage and ensures that even the most minor aspects of the software are covered, as AI algorithms can run hundreds or even thousands of test cases simultaneously.

Scalability: Traditional testing methods struggle to keep up with your project’s development, especially if this development is rapid. Autonomous testing, in turn, thrives in large-scale environments. Whether you're testing a small feature or an entire system, autonomous testing adapts to the project’s size and complexity. For businesses with ambitious software development goals, this scalability is invaluable.

Resource optimization: With autonomous testing, QA teams don’t need to spend hours on repetitive tasks like regression testing. Instead, these tasks are offloaded to AI, and specialists can focus on more strategic and creative aspects of quality assurance. The result is more efficient use of time, better allocation of skilled resources, and the much-loved cost savings.

Adaptability: Autonomous testing systems can continuously learn and adapt to changes in your software, ensuring that your test coverage remains up-to-date. This is particularly important in today's rapidly evolving software landscape.

Improved decision-making: Valuable insights into your software's quality and performance help you make more informed decisions. In-depth analysis of test results and trends lets you pinpoint areas for improvement and optimize your development process.

Limitations of autonomous testing

Once again, nothing is ideal, and now it’s time to look at the flaws and limitations of autonomous software testing. It definitely offers tremendous benefits, but there are areas where it may not meet your expectations.

Not a complete replacement

While many are scared of “AI enslavement”, you don’t need to fear total replacement. Autonomous testing is not supposed to fully replace manual testing, at least not yet. It automates repetitive and routine tasks, but some tests involve user experience or highly creative workflows, and human intuition is vital there.

Initial setup and learning curve

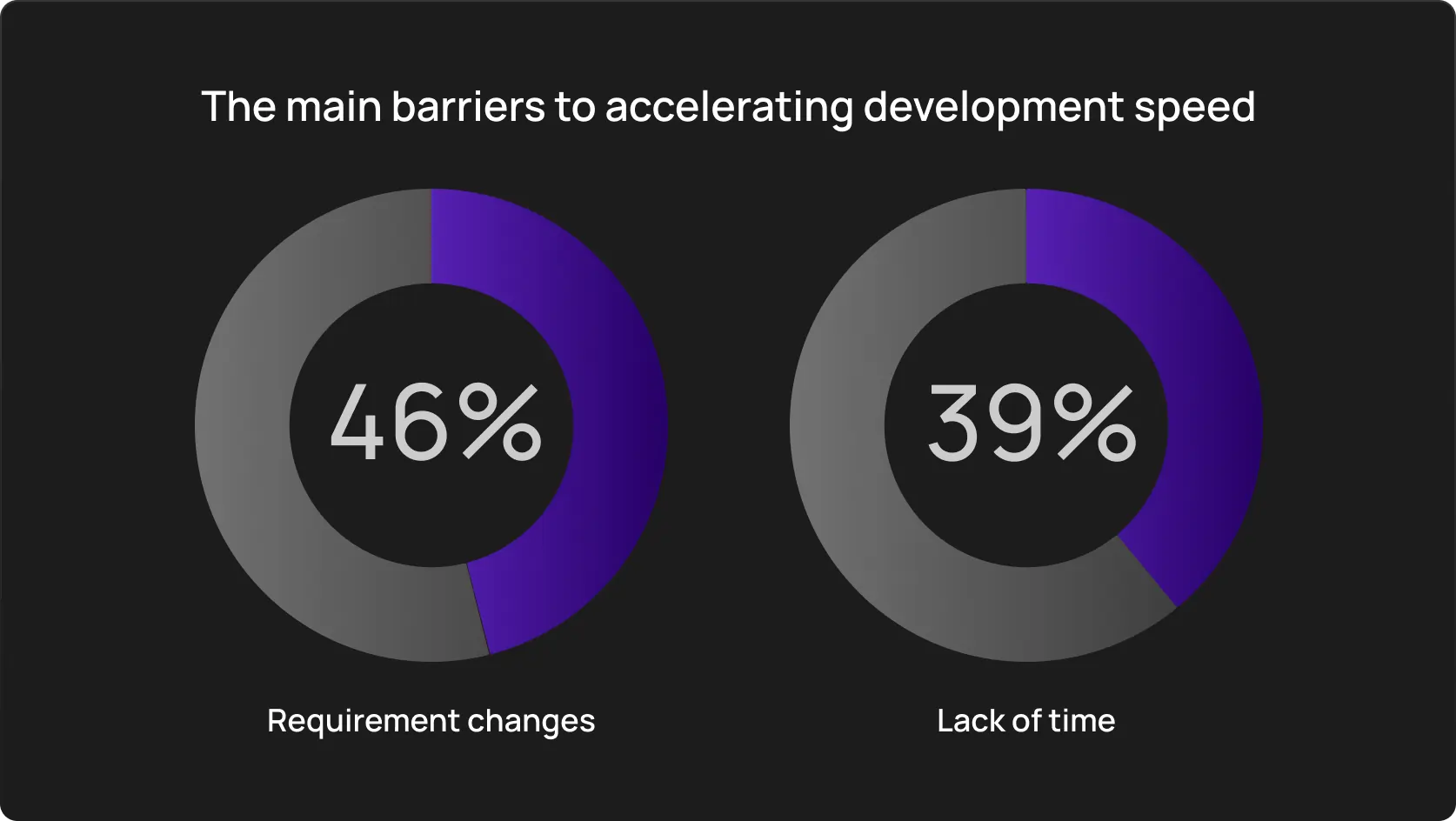

Don’t expect this to happen overnight. The initial setup requires a time investment. Your team needs to integrate the tools into existing workflows, define automation parameters, and train AI models to work effectively with your software.

On top of that, there’s a learning curve for the QA team. While machine learning testing simplifies many processes, it still requires effort to get everyone on board, especially for teams used to traditional testing methods.

Complex scenarios

Complex or creative scenarios are where humans thrive. Their flexibility, imagination, and understanding of user behavior set them apart from AI. Artificial intelligence operates within the constraints of its programming and training data and might miss nuanced edge cases or fail to test certain creative interactions.

How to successfully integrate autonomous testing

Successfully integrating autonomous testing into your software development process requires a strategic approach. Here are key steps to ensure a smooth and impactful transition:

1/ Your needs first

Start with a clear assessment of your current testing processes:

- Identify bottlenecks: Where do manual tasks slow you down? Repetitive tests like regression testing are prime candidates for automation.
- Spot areas for improvement: Look at areas with frequent errors or inefficiencies. These are where autonomous testing can bring the most value.
- Assess resource allocation: Where is your team spending too much time? Automating those areas will free up valuable resources.

Key insight: There is no need to automate everything. Focus on areas where autonomy can solve specific problems — speed, accuracy, or scalability.

2/ Choose the right tool

When choosing, take into account the following:

- Easy integration: Your current tech stack and workflow determine how easily a tool will integrate.
- Scalability: The tool should handle both small-scale projects and larger, more complex systems (with your future growth in mind).
- Flexibility: Tools that allow customization and adaptation to various testing environments are ideal.

Why choose OwlityAI? Easy adoption, seamless integration. And minimal setup — just input your web app URL, and it generates a comprehensive test report without the need for manual scripting. The AI adapts to your software’s unique requirements, providing scalability and flexibility from day one.

3/ Training and support

Your team needs to understand not only how to use the tool but also how to interpret results and intervene when necessary. Make sure you cover the following:

- Ongoing education: A one-time training session isn’t enough; the learning process must be continuous. This is the only way your QA team will stay current with new features and testing methodologies.
- Support systems: Perfecto found that 72% of companies improved the performance of their automation processes when they had access to comprehensive training and support systems.

Key insight: The best tools come with strong user communities and accessible training materials to help teams stay engaged and knowledgeable over time.

4/ Start small

Dream of rolling out autonomous testing across your entire project portfolio at once? Great, yet it’s only a dream. This approach can overwhelm teams and systems. Consider a pilot project first.

- Choose a less critical project that allows the team to focus on learning without the pressure of high stakes.
- Track key metrics (speed, error reduction, resource savings, etc.) to understand the impact.
- Use the pilot project to identify areas for improvement before expanding to more complex projects.
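Tracking the pilot can be as lightweight as comparing a few numbers before and after. The metric names and values below are invented for illustration, so pick whatever your team already measures. The `percent_change` and `compare` helpers are hypothetical.

```python
def percent_change(baseline, pilot):
    """Relative change from baseline to pilot, in percent (negative = reduction)."""
    return (pilot - baseline) * 100 / baseline

def compare(baseline, pilot):
    """Percent change for every metric in the baseline, rounded to one decimal."""
    return {
        metric: round(percent_change(baseline[metric], pilot[metric]), 1)
        for metric in baseline
    }

# Invented figures for one release cycle before and during the pilot.
baseline = {"regression_hours": 40, "escaped_defects": 10, "manual_hours": 120}
pilot = {"regression_hours": 10, "escaped_defects": 6, "manual_hours": 60}

print(compare(baseline, pilot))
# {'regression_hours': -75.0, 'escaped_defects': -40.0, 'manual_hours': -50.0}
```

Agreeing on the baseline before the pilot starts keeps the comparison honest.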

Key insight: Small steps allow you to iron out any challenges, set realistic expectations, and gradually increase confidence in autonomous testing.

5/ Continuous improvement

- Regularly review and optimize your testing strategies.
- Keep abreast of new features and capabilities in your chosen tool.
- Encourage feedback from your QA team and developers.
- Analyze performance metrics to identify areas for improvement.

Refine your approach. This way, you'll maximize the benefits of autonomous testing over time.

6/ Collaborate across teams

Before implementation, ensure buy-in from various stakeholders (QA, development, leaders, operation teams, and product specialists) and align them.

- Developers need to understand how autonomous testing affects their workflow.
- Product managers should align feature prioritization with testing capabilities.
- Operations teams must be prepared for potential changes in deployment processes.

Hold regular cross-team meetings to address concerns and share successes.

Bottom line

You may think that autonomous software testing is a game-changer, and you will be nearly 100% right. Yet it comes with limitations: it excels at speeding up testing cycles, improving accuracy, and scaling for large projects, but it's not a complete replacement for human testers.

You will still need proper setup, training, and expectations management. The key is understanding where AI in testing fits into your development process and how it can complement manual efforts.

OwlityAI, our AI-powered testing tool, strikes the perfect balance between automation and human oversight. Setup takes three steps, and OwlityAI adapts to your software’s unique needs.
